I had the opportunity to do a bit of SEO forensic analysis this week on a web site that had been previously optimised by several different SEO companies over a period of a few years.

The owner had noticed a dramatic drop in rankings over the last few weeks and told a friend, who then contacted me.

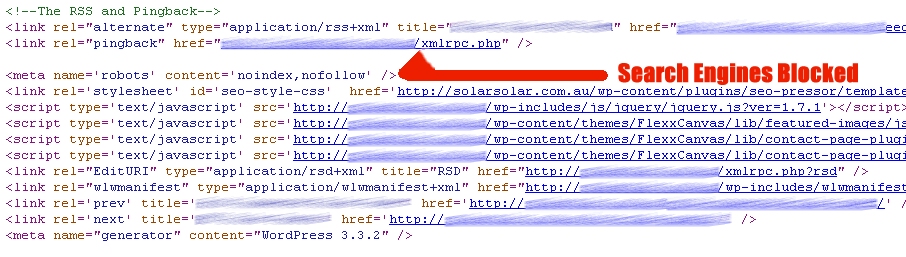

I thought I’d have a quick look at the page source code and the robots.txt file on the server.

Sure enough, in the header of the home page (see image below) was a line of code telling all the search engines not to index the site or follow any links on the page.

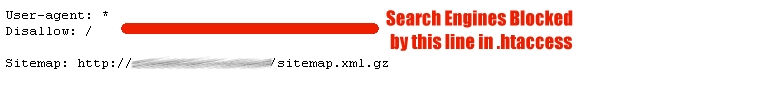
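If you'd rather not eyeball the source by hand, here is a rough Python sketch of the same check. It assumes the blocking line is the standard robots meta tag, something like `<meta name="robots" content="noindex, nofollow">`. The sample HTML and the names `RobotsMetaFinder` and `blocking_directives` are made up for illustration; they are not taken from the client's site.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            # content is a comma-separated list such as "noindex, nofollow"
            for directive in attr.get("content", "").split(","):
                if directive.strip():
                    self.directives.append(directive.strip().lower())


def blocking_directives(html):
    """Return the directives in the page that hide it from search engines."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return [d for d in finder.directives if d in ("noindex", "nofollow", "none")]


# Hypothetical page source, for illustration only
sample = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(blocking_directives(sample))  # ['noindex', 'nofollow']
```

An empty list means no blocking meta tag was found. It says nothing about robots.txt or .htaccess, which need their own checks.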

Similarly, a line in the robots.txt file in the root directory of the web server (see image) also blocked the search engine spiders.
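The robots.txt check can be scripted too. This minimal sketch uses Python's standard `urllib.robotparser` and assumes the blocking rule is the usual `Disallow: /` under `User-agent: *`. Both sample files and the helper name `is_blocked` are invented for illustration.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt, user_agent="Googlebot", path="/"):
    """Return True if the given robots.txt text forbids user_agent from fetching path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, path)


# Hypothetical file contents, for illustration only
blocking = "User-agent: *\nDisallow: /\n"  # shuts every spider out of the whole site
harmless = "User-agent: *\nDisallow:\n"    # an empty Disallow allows everything

print(is_blocked(blocking))  # True
print(is_blocked(harmless))  # False
```

To test a live site instead of pasted text, `RobotFileParser` also offers `set_url()` and `read()`, which fetch the file for you.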

I also suspect that there is a line in the .htaccess file blocking the search engines as well. If I had access to the hosting, I could check this.

As far as search engines are concerned, this web site is “dead, buried and cremated”, as Tony Abbott would say.

Whoever made these changes really wanted to screw this business over.

To me, it’s bordering on criminal that a local Brisbane SEO or web design company would go to these lengths to get even with a client who left them or didn’t pay their bill.

If you have recently had a dispute with, or sacked, an SEO or web design company and want to check that your web site is still OK, here are three checks you can run:

1. To check your page meta tags, view the source code and search for the word noindex. If you see the word noindex in the source code, be very suspicious. To view your page source code, right-click on the page, then select view source.

2. To check your robots.txt file, you can either enter the address straight into your browser, like this example: http://searchenginecourse.com.au/robots.txt or use the nice little tool here: http://phpweby.com/services/robots

3. To check your .htaccess file, you will need to get access to the root directory of your web host via FTP or cPanel, find the file, and have a look inside it. Look for a line that starts with deny from followed by an IP address. It looks like this:

deny from 216.55.45.66

One of the blocked addresses may belong to Google. Here’s the current list of Google IP addresses.
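If your .htaccess file is long, a few lines of Python can pull out every deny from entry for you. This is only a sketch: it looks for the deny from syntax shown above and nothing else, the helper name `denied_addresses` is made up, and every address in the sample other than 216.55.45.66 is a placeholder. You would still need to compare the results against Google's published addresses yourself.

```python
import re

# Matches lines such as "deny from 216.55.45.66" (case-insensitive, leading spaces allowed)
DENY_RE = re.compile(r"^\s*deny\s+from\s+(.+)$", re.IGNORECASE)


def denied_addresses(htaccess_text):
    """Return every address or host listed on a 'deny from' line."""
    found = []
    for line in htaccess_text.splitlines():
        match = DENY_RE.match(line)
        if match:
            # One line may list several addresses separated by spaces
            found.extend(match.group(1).split())
    return found


# Hypothetical .htaccess contents, for illustration only
sample_htaccess = """\
# old rules left by the previous developer
order allow,deny
deny from 216.55.45.66
Deny from 10.0.0.1 192.168.0.0/16
allow from all
"""

print(denied_addresses(sample_htaccess))
# ['216.55.45.66', '10.0.0.1', '192.168.0.0/16']
```

Commented-out lines are ignored because they start with # and not deny. A result of `['all']` would mean the file blocks every visitor.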

The good news is that if this blocking code is removed, the web pages will bounce back in the search results in just a few days.

Until next week.